Trying Different Probability Distributions for DLM Training and Seeing What Happens

Language models estimate the probability distribution over sequences of tokens. That is, given a piece of text $x = (x_1, \dots, x_L)$, a language model assigns it a number $p_\theta(x)$, the probability of that text under the model’s estimate of the underlying distribution of the training data.

They are canonically autoregressive (almost all the popular ones are), in which case the probability of a piece of text is estimated with the following expression:

$$p_\theta(x) = \prod_{i=1}^{L} p_\theta(x_i \mid x_{<i})$$

This is the product of the conditional probability of each token given the tokens generated before it. Instead of conditioning on previously generated tokens over and over again, Diffusion Language Models (DLMs) use a product of marginal probabilities, where a marginal probability is the probability of a single token given the context:

$$p_\theta(x_{\mathcal{M}} \mid x_t) \approx \prod_{i \in \mathcal{M}} p_\theta(x_i \mid x_t)$$

The context here is $x_t$, a copy of the sequence in which some tokens have been replaced by a mask token, leaving a partially clean sequence. $\mathcal{M}$ is the set of positions (indices) of these mask tokens.
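To make the two factorizations concrete, here is a toy sketch in Python. The sentence, every probability, and the helper names `ar_log_prob` and `dlm_log_prob` are made up for illustration; no trained model is involved.

```python
import math

# Hypothetical toy sentence and made-up probabilities (not from any model).
sentence = ["the", "cat", "sat"]

# Autoregressive view: p(x) = p(x1) * p(x2 | x1) * p(x3 | x1, x2).
# Keys are prefixes; values are p(last token of prefix | earlier tokens).
ar_conditionals = {
    ("the",): 0.2,
    ("the", "cat"): 0.1,
    ("the", "cat", "sat"): 0.3,
}

def ar_log_prob(tokens, table):
    """Chain rule: sum log p(x_i | x_<i) over every position."""
    total = 0.0
    for i in range(1, len(tokens) + 1):
        total += math.log(table[tuple(tokens[:i])])
    return total

# DLM view: the partially masked sequence is "the [MASK] [MASK]".
# Only masked positions are scored, each conditioned on that same sequence.
masked_positions = [1, 2]
dlm_marginals = {1: 0.05, 2: 0.4}  # hypothetical p(x_i | x_t) per masked i

def dlm_log_prob(positions, marginals):
    """Sum log p(x_i | x_t) over masked positions, scored independently."""
    return sum(math.log(marginals[i]) for i in positions)

print(round(math.exp(ar_log_prob(sentence, ar_conditionals)), 4))          # 0.006
print(round(math.exp(dlm_log_prob(masked_positions, dlm_marginals)), 4))   # 0.02
```

Each autoregressive factor sees a longer prefix than the one before it, while every DLM factor conditions on the same partially masked sequence.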

Both can be used for text generation. Given a prompt, an autoregressive model repeatedly feeds the tokens generated so far back in as the condition of the conditional probability, one token at a time, whereas a diffusion model appends a chunk of mask tokens to the end of the prompt and predicts (demasks) them.
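A toy sketch of the two generation loops, in plain Python. `toy_predict` is a hypothetical stand-in for a model (it just emits a placeholder token), and the DLM loop reveals masked positions left to right in fixed-size chunks purely for simplicity; real DLM samplers use their own rules for which positions to reveal at each step.

```python
MASK = "[MASK]"

def toy_predict(context, position):
    """Hypothetical stand-in for a model call: returns a token for `position`.
    A real model would return a distribution conditioned on `context`."""
    return f"tok{position}"

def generate_ar(prompt, n_new):
    """Autoregressive: one forward pass per new token, each conditioned on
    everything generated so far."""
    seq = list(prompt)
    for _ in range(n_new):
        seq.append(toy_predict(seq, len(seq)))
    return seq

def generate_dlm(prompt, n_new, n_steps):
    """Diffusion-style: append a block of mask tokens, then fill several masked
    positions per forward pass until none remain."""
    seq = list(prompt) + [MASK] * n_new
    per_step = -(-n_new // n_steps)  # ceiling division: positions filled per pass
    for _ in range(n_steps):
        masked = [i for i, tok in enumerate(seq) if tok == MASK]
        if not masked:
            break
        snapshot = list(seq)  # every prediction in this pass sees the same context
        for i in masked[:per_step]:
            seq[i] = toy_predict(snapshot, i)
    return seq

print(generate_ar(["hi"], 4))
print(generate_dlm(["hi"], 4, n_steps=2))
```

With `n_steps` smaller than `n_new`, the DLM loop makes fewer forward passes than the autoregressive one, which is the source of the speed advantage of DLMs.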

the problem

One of the challenges of DLM training is the performance gap between diffusion and autoregressive models. Arguably, this is mostly because an autoregressive model conditions on more and more tokens as it goes, that is, the context in its conditional probability keeps growing, whereas the partially clean sequence in a DLM stays constant (although there are remasking strategies that try to mitigate this problem). At the same time, DLMs are faster because they generate several tokens in a single forward pass instead of one by one as autoregressive models do.

changing the probability distributions

Many research efforts have tried to close this gap by introducing new ways to train DLMs. In the same spirit, we want to see whether changing the probability distribution from which tokens are masked gives us any performance gains, or at least any behaviors worth observing. The idea came from thinking about how a marginal probability can be estimated from different amounts of context; we then ask:

During training, how much masking leaves too little context, and how little masking leaves so much context that it hurts generalization?

methodology

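Let our training minibatch be $X \in \mathcal{V}^{B \times L}$, where $B$ is the batch size, $L$ is the sequence length in tokens, and $\mathcal{V}$ is the vocabulary. As our baseline, we adopt the DLM training method of LLaDA (Nie et al., 2025): for each sequence $x_b$, we sample $t_b \sim \mathcal{U}(0, 1)$, and for each token $x_{b,i}$ we sample $u_{b,i} \sim \mathcal{U}(0, 1)$. Tokens are masked according to the following rule, producing the masked sequence $\tilde{x}_b$:

$$
\tilde{x}_{b,i} =
\begin{cases}
\texttt{[MASK]} & \text{if } u_{b,i} < t_b \\
x_{b,i} & \text{otherwise}
\end{cases}
$$

where $t_b$ is the probability of a token in sequence $b$ being masked. Consequently, the number of masked tokens in a sequence is $N_b = \sum_{i=1}^{L} \mathbb{1}[u_{b,i} < t_b]$, which, given $t_b$, follows $\mathrm{Binomial}(L, t_b)$.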
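A minimal, standard-library sketch of this baseline masking rule. The token ids, the mask id `-1`, and the helper name `llada_mask` are hypothetical; LLaDA’s reference code operates on tensors and has its own implementation details, so treat this as an illustration of the rule rather than a port.

```python
import random

def llada_mask(batch, mask_id, rng):
    """Baseline masking: draw one t ~ U(0, 1) per sequence, then mask each token
    independently when its own u ~ U(0, 1) falls below t."""
    masked_batch, ts = [], []
    for seq in batch:
        t = rng.random()  # masking probability shared by this row
        row = [mask_id if rng.random() < t else tok for tok in seq]
        masked_batch.append(row)
        ts.append(t)
    return masked_batch, ts

rng = random.Random(0)
batch = [[5, 9, 2, 7, 3, 8] for _ in range(4)]  # toy batch: B = 4, L = 6
masked, ts = llada_mask(batch, mask_id=-1, rng=rng)
for t, row in zip(ts, masked):
    print(f"t={t:.2f} masked={row.count(-1)}/{len(row)} row={row}")
```

Because $t_b$ is uniform, some rows end up almost fully masked and others almost clean, so a single batch mixes very easy and very hard demasking problems.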

but in our study…

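In our study, instead of sampling $t_b \sim \mathcal{U}(0, 1)$, we define a set of expected masking probabilities $\mathcal{E} = \{\mu_1, \dots, \mu_K\}$,

and, for each row $b$ in the training batch, we sample the masking probability $t_b$ from a distribution over $[0, 1]$ whose expectation is fixed to one of these values,

such that $\mathbb{E}[t_b] = \mu_k$, with a separate parameter $\kappa$ controlling its spread.

We run a separate experiment for each $\mu_k \in \mathcal{E}$, which means our masking function now follows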

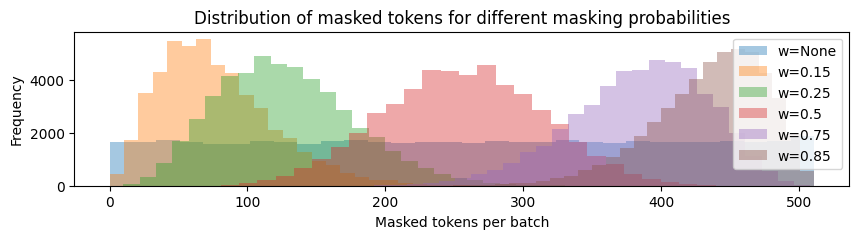
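A minimal sketch of this masking function, under an assumption: we take the distribution of $t_b$ to be a Beta distribution reparameterized by its mean $\mu$ and a concentration $\kappa$, i.e. $\mathrm{Beta}(\mu\kappa, (1-\mu)\kappa)$, whose mean is $\mu$ and whose variance $\mu(1-\mu)/(\kappa+1)$ shrinks as $\kappa$ grows. The family, the helper names, and the mask id are illustrative choices, not necessarily what the experiments used.

```python
import random

def sample_mask_prob(mu, kappa, rng):
    """ASSUMPTION: t ~ Beta(mu * kappa, (1 - mu) * kappa), so E[t] = mu and
    Var[t] = mu * (1 - mu) / (kappa + 1)."""
    if not 0.0 < mu < 1.0 or kappa <= 0.0:
        raise ValueError("need 0 < mu < 1 and kappa > 0")
    return rng.betavariate(mu * kappa, (1.0 - mu) * kappa)

def mask_batch(batch, mu, kappa, mask_id, rng):
    """Same per-token rule as the baseline, but t is drawn around mu
    instead of uniformly on [0, 1]."""
    out = []
    for seq in batch:
        t = sample_mask_prob(mu, kappa, rng)
        out.append([mask_id if rng.random() < t else tok for tok in seq])
    return out

print(mask_batch([[1, 2, 3, 4, 5, 6]] * 3, mu=0.3, kappa=20.0, mask_id=-1,
                 rng=random.Random(0)))
```

Keeping the per-token rule unchanged means only the distribution of $t_b$ differs from the baseline, which isolates its effect.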

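To illustrate this, we simulate masking for each $\mu_k$ over a fixed number of sequences of fixed length and draw a histogram of the number of masked tokens per sequence (the default setup is included for reference).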

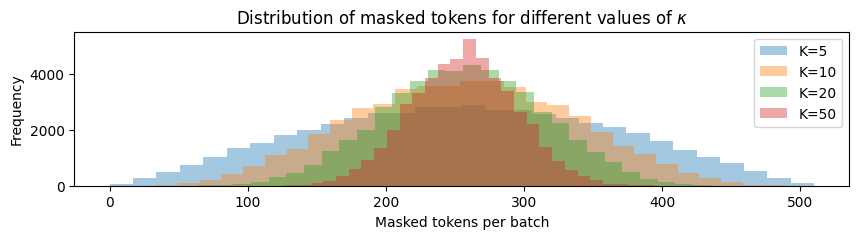
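A small standard-library simulation in the same spirit, printing a text histogram of the masked fraction $N/L$. The sequence length, the number of sequences, and the $\mu$, $\kappa$ values are illustrative, and the Beta parameterization is again an assumption.

```python
import random
from collections import Counter

def masked_counts(n_seqs, seq_len, draw_t, rng):
    """Simulate N (masked tokens per sequence) for n_seqs sequences;
    draw_t(rng) returns the per-sequence masking probability t."""
    counts = []
    for _ in range(n_seqs):
        t = draw_t(rng)
        counts.append(sum(rng.random() < t for _ in range(seq_len)))
    return counts

def text_histogram(counts, seq_len, n_bins=10, width=40):
    """Print a text histogram of N / L in equal-width bins; return bin shares."""
    bins = Counter(min(int(n_bins * c / seq_len), n_bins - 1) for c in counts)
    shares = [bins[b] / len(counts) for b in range(n_bins)]
    for b, share in enumerate(shares):
        print(f"{b / n_bins:.1f}-{(b + 1) / n_bins:.1f} | {'#' * round(share * width)}")
    return shares

rng = random.Random(0)
SEQ_LEN, N_SEQS = 64, 2000  # illustrative sizes only
settings = {
    "default: t ~ U(0, 1)": lambda r: r.random(),
    # ASSUMPTION: Beta with mean mu and concentration kappa; values are illustrative.
    "mu = 0.3, kappa = 20": lambda r: r.betavariate(0.3 * 20, 0.7 * 20),
    "mu = 0.7, kappa = 20": lambda r: r.betavariate(0.7 * 20, 0.3 * 20),
}
for name, draw in settings.items():
    print(name)
    text_histogram(masked_counts(N_SEQS, SEQ_LEN, draw, rng), SEQ_LEN)
```

The uniform default spreads the masked fraction roughly evenly over $[0, 1]$, while each fixed-mean setting concentrates it around its $\mu$.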

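We also investigate the effect of the spread of the distribution of masked tokens. Specifically, we vary $\kappa$, which controls the variance of $t_b$ and, through it, the spread of the number of masked tokens per sequence.

We illustrate this with the same sequence length and number of sequences as above.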

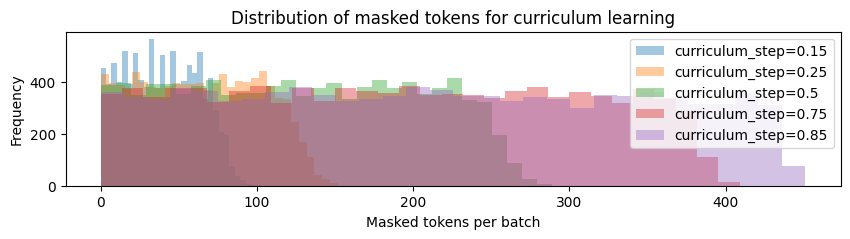
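Why the spread of $t$ shows up so strongly in the number of masked tokens follows from the law of total variance. Assuming tokens are still masked independently given $t$, as in the baseline, $N \mid t \sim \mathrm{Binomial}(L, t)$, and with $\mu = \mathbb{E}[t]$, for any distribution of $t$ on $[0, 1]$:

$$
\begin{aligned}
\mathbb{E}[N] &= \mathbb{E}\big[\mathbb{E}[N \mid t]\big] = L\mu, \\
\operatorname{Var}[N] &= \mathbb{E}\big[\operatorname{Var}[N \mid t]\big] + \operatorname{Var}\big[\mathbb{E}[N \mid t]\big] \\
&= \mathbb{E}\big[L\,t(1-t)\big] + \operatorname{Var}[L\,t] \\
&= L\big(\mu - \mu^2 - \operatorname{Var}[t]\big) + L^2 \operatorname{Var}[t] \\
&= L\mu(1-\mu) + L(L-1)\operatorname{Var}[t].
\end{aligned}
$$

The first term is the variance a fixed $t = \mu$ would give; the second comes from the spread of $t$ and grows with $L^2$, so for long sequences it dominates. For the default $t \sim \mathcal{U}(0, 1)$, $\mu = 1/2$ and $\operatorname{Var}[t] = 1/12$. If one assumes a Beta parameterization with mean $\mu$ and concentration $\kappa$, then $\operatorname{Var}[t] = \mu(1-\mu)/(\kappa+1)$.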

Finally, we also try curriculum learning, in which the masking probability changes with the training step. We set the curriculum so that the model first learns to demask fewer tokens and gradually moves on to demasking more tokens. The intuition is to see whether gradually shifting from an easier task to a harder one improves generalization. Specifically, we split the training-step budget evenly across the curriculum steps and, in the $k$-th step, sample $t_b$ with expectation $\mu_k$, using the same set of expectations as in our ablation experiment. We also illustrate this curriculum training distribution.
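A minimal sketch of such a stage-wise schedule. The helper name `curriculum_mu` and the example values in `mus` are hypothetical; the returned expectation would then be passed to whatever sampler draws $t_b$ for that training step.

```python
def curriculum_mu(step, total_steps, mus):
    """Stage-wise curriculum: split total_steps evenly into len(mus) stages and
    return mus[k] for the stage k containing `step`. mus should be ascending
    (fewer masked tokens first, more later)."""
    if not 0 <= step < total_steps:
        raise ValueError("step must be in [0, total_steps)")
    if list(mus) != sorted(mus):
        raise ValueError("mus should increase so later stages mask more tokens")
    stage = step * len(mus) // total_steps  # integer stage index in [0, len(mus) - 1]
    return mus[stage]

# Hypothetical expectations, for illustration only.
mus = [0.2, 0.4, 0.6, 0.8]
print([curriculum_mu(s, 1000, mus) for s in (0, 249, 250, 999)])  # [0.2, 0.2, 0.4, 0.8]
```

Integer arithmetic keeps the stage boundaries exact, so every stage gets the same number of steps whenever `total_steps` is divisible by the number of stages.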

results?!

masking sweet spot

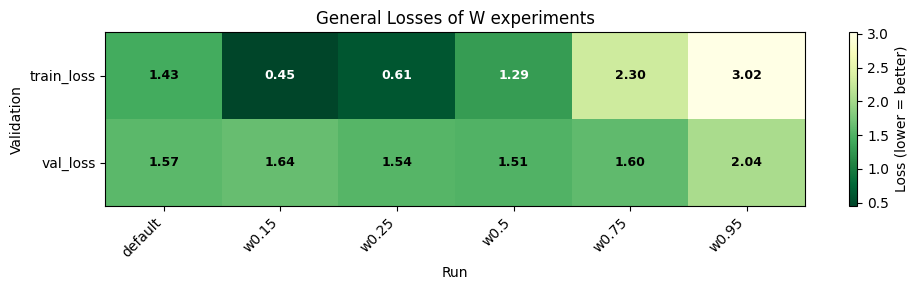

It is easier to demask a small number of tokens than many. We found that setting a smaller $\mu$ gives the lowest training losses. This is expected: with a lower $\mu$, the model has to demask fewer tokens, which is intuitively much easier than having to demask many tokens correctly. But when we look at the validation loss, we see that some masking distributions outperform the default distribution: for two of our settings, the validation loss is lower than the default’s.


making sure

Yeah, that’s great and all, but is it really? Now, I’m not making any bold claims that this is a surefire hyperparameter for better DLM training, but let’s look at different validation scenarios: we also validate each model on the other masking probabilities in our experiments.

We see a more substantial decrease in validation loss (relative to the default) when we test these models on demasking fewer tokens in a given sentence. Surprisingly, the two sweet-spot settings outperform the model trained at that lower masking probability on its own validation scheme, suggesting that models trained at these settings generalize well to demasking fewer tokens, even better than models specifically trained to do so. However, in our experiments, this phenomenon does not hold beyond these settings: training to demask even more tokens yields worse validation loss, suggesting diminishing returns in the number of tokens to be demasked during training.
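One plausible way to run this cross-evaluation is to compute, for each trained model, a masked-token loss at a fixed validation masking probability. The sketch below assumes the loss is the mean negative log-likelihood over masked positions; that normalization is an assumption (LLaDA’s training objective, for instance, also weights the sum by $1/t$). `log_prob_fn` and `uniform_log_prob` are hypothetical placeholders for a model.

```python
import math
import random

def masked_nll(batch, t_val, log_prob_fn, mask_id, rng):
    """Validation loss at a fixed masking probability t_val: mask each token
    with probability t_val, then average -log p(x_i | x_t) over masked positions."""
    total, n_masked = 0.0, 0
    for seq in batch:
        noisy = [mask_id if rng.random() < t_val else tok for tok in seq]
        for i, (orig, cur) in enumerate(zip(seq, noisy)):
            if cur == mask_id:
                total -= log_prob_fn(noisy, i, orig)
                n_masked += 1
    return total / n_masked if n_masked else float("nan")  # nan: nothing was masked

VOCAB_SIZE = 100

def uniform_log_prob(noisy, position, target):
    """Hypothetical placeholder model: uniform over the vocabulary."""
    return -math.log(VOCAB_SIZE)

rng = random.Random(0)
val_batch = [[rng.randrange(VOCAB_SIZE) for _ in range(32)] for _ in range(8)]
for t_val in (0.1, 0.3, 0.5):
    # The uniform placeholder gives log(100) (about 4.6052) at every t_val.
    print(t_val, round(masked_nll(val_batch, t_val, uniform_log_prob, -1, rng), 4))
```

Running this for every (trained model, validation $t$) pair and comparing each entry with the default model’s loss at the same $t$ gives the kind of relative comparison described above.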

varying the variance

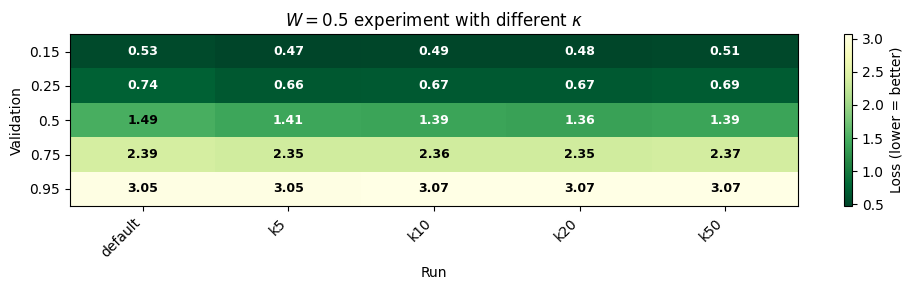

We have shown that two expected masking probabilities are potential sweet spots in DLM training. To dissect this behavior further, we investigated the variance of $t_b$ around them (specifically, we choose several values of $\kappa$). We do not observe any significant effect when we vary the variance of $t_b$. However, all of the experiments with different variances outperform the default distribution, further strengthening our previous hypothesis. This also suggests that the expectation matters more than the variance when it comes to the masking probability distribution.


current curriculum learning worsens performance

We found that our naive curriculum strategy worsens performance, despite the intuition that learning to demask fewer tokens in earlier steps would make learning to demask more tokens in later steps easier. A training loss that gradually increases instead of decreasing is expected, since the training task gets harder as training progresses. However, we make no claims about the performance of curriculum learning in general, since this phenomenon warrants further experimentation.

conclusions

We conclude with the suggestion that training DLMs to demask around the sweet-spot masking probabilities found above works better than the default masking distribution. We further showed that, in our experiments, the variance of the distribution doesn’t really matter. We also see that our current curriculum training doesn’t really work, but we can’t say yet that curriculum learning doesn’t work at all, so we still have every reason to try fancier ways of doing it.

We release the code at https://github.com/rayendito/dlm_optim

acknowledgements

Many thanks to my colleagues and advisor: Erland Fuadi, Zayd Zuhri, and Dr. Alham Fikri Aji.

references

- Nie et al. (2025). Large Language Diffusion Models.

bibtex citation

@misc{diandaru2025dlmabl,
  author = {Diandaru, Ryandito},
  title = {Trying Different Probability Distributions for DLM Training and Seeing What Happens},
  year = {2025},
  howpublished = {\url{https://rayendito.github.io/posts/dlm_ablation}},
}